Announcing Helix 1.4 – CI/CD for Cloud-Native GenAI Apps

Helix test (evals) and a K8s Operator with a Helix + Flux reference architecture = GitOps for GenAI. Hardened scheduler, new long-context models. JIRA and multi-lingual RAG apps.

Since we launched our 1.0 in September, interesting things have been happening commercially, and also from a product perspective.

One of the big bits of feedback I got from talking to people with more commercial smarts than me, like Matt Barker and Joey Zwicker, was the importance of focusing on vertical use cases in specific industries. Horizontal platforms don’t work, they said.

So I completely ignored their advice, and took us in the direction of adding CI/CD and Evals to the product.

“Why did you do the wrong thing, Luke!?” I hear you asking.

Because CI/CD is foundational

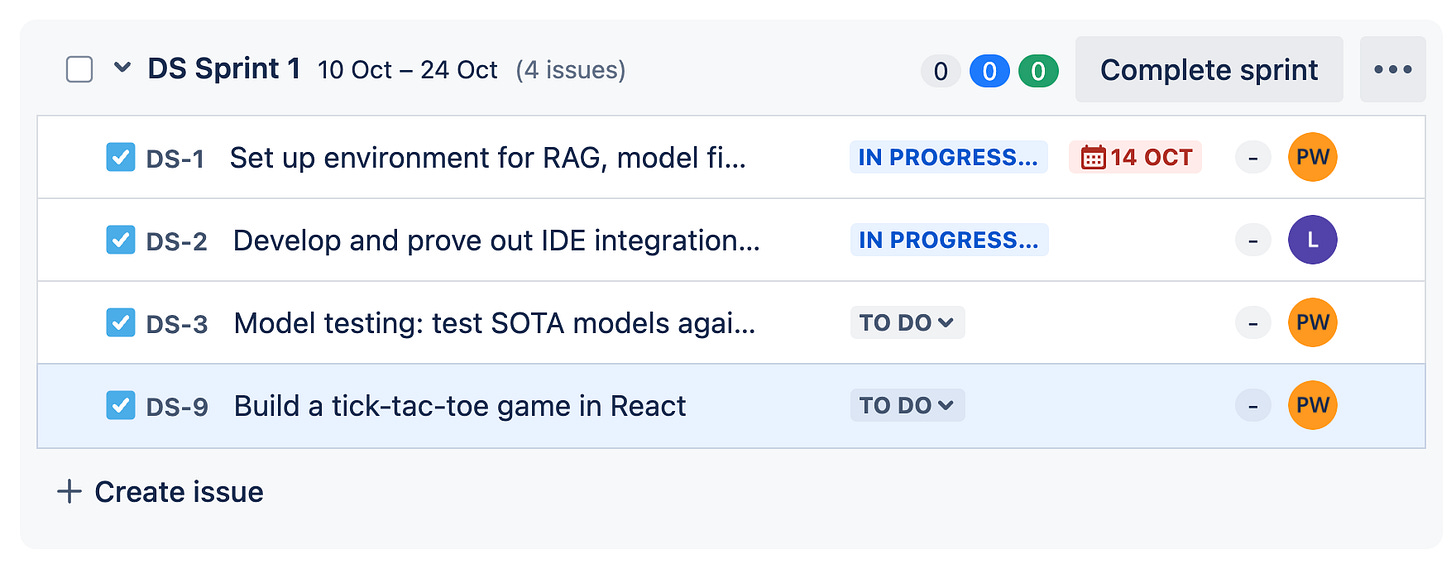

The opposing insight came from two data points. Firstly, the Saudi bank that pushed us to develop JIRA and multi-lingual RAG examples got us developing our own Helix Apps, and they gave us a nice list of features they wanted from the JIRA integration.

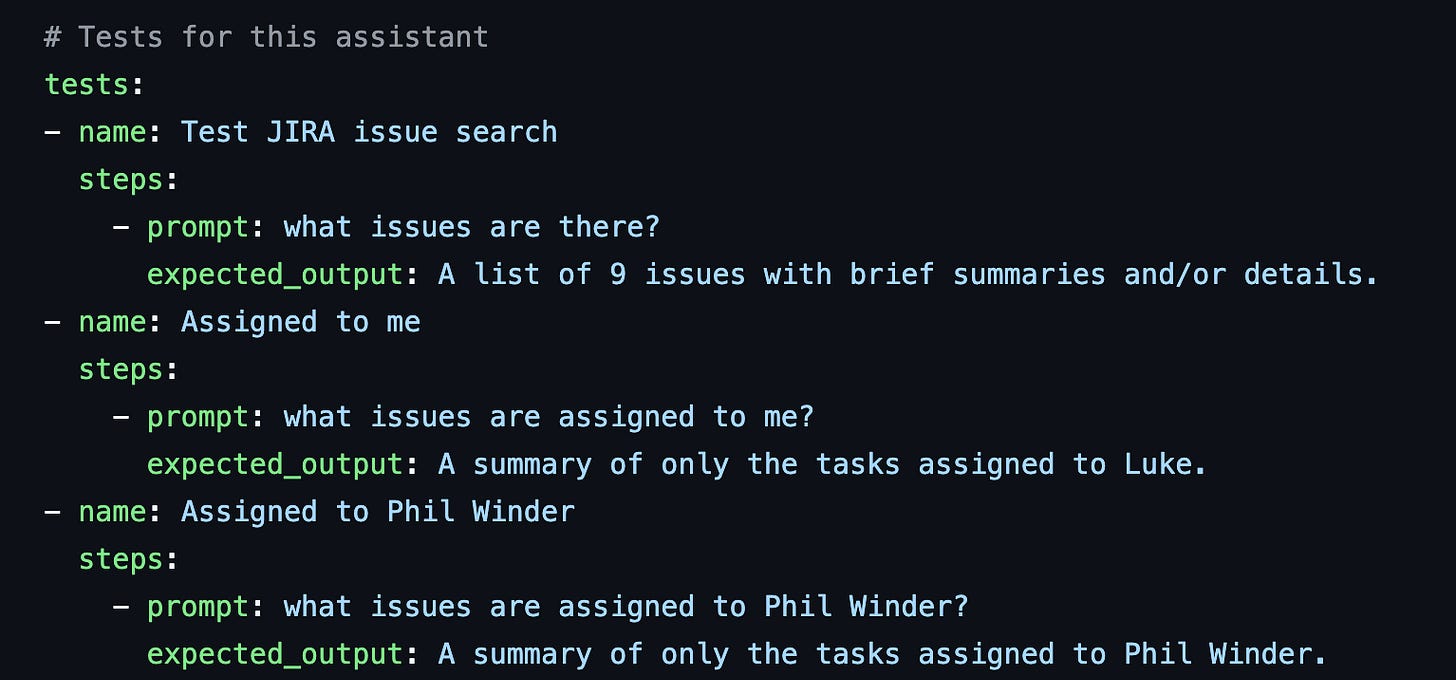

Each feature they requested very naturally formed a test case. And when developing the JIRA app, I was faced with a decision: manually test each case as I iterated on teaching Llama 3.1 how to write JQL (the JIRA query language) through prompting, or take a step back and build our own lightweight testing framework.

Of course I couldn’t resist the rabbit-hole of solving our own problem by creating tooling. I’m a sucker for writing dev tooling, but sometimes it feels right.

The second data point is our earliest customer, AWA Network, and the fact that they started developing evals for their Helix Apps early on. Melike is one of their engineers, and in her own words:

"At AWA, we're developing Helix Apps to help our clients drive revenue, such as scaling their sales teams with natural language interface to product catalogs and other APIs and knowledge sources. We've been pioneering evals (tests) for our Helix Apps for several months. The evals are essential to ensure that changes we make to the model, the prompting, the knowledge or the API integrations make things better and don't regress expected functionality. You wouldn't ship software without tests, and you shouldn't ship AI Apps without evals! We're glad that Helix added evals (CI) and deployment (CD) support to the product, they've been very fast in developing the features we needed!"

– Melike Cankaya, Software Engineer at AWA Network

Yes, Melike, you’re right!

You wouldn’t ship software without tests, and you shouldn’t ship AI Apps without evals

Honestly, we saw how much complexity this team took on by implementing evals separately from Helix, and we wanted to absorb that complexity into Helix itself, as well as serve our own needs.

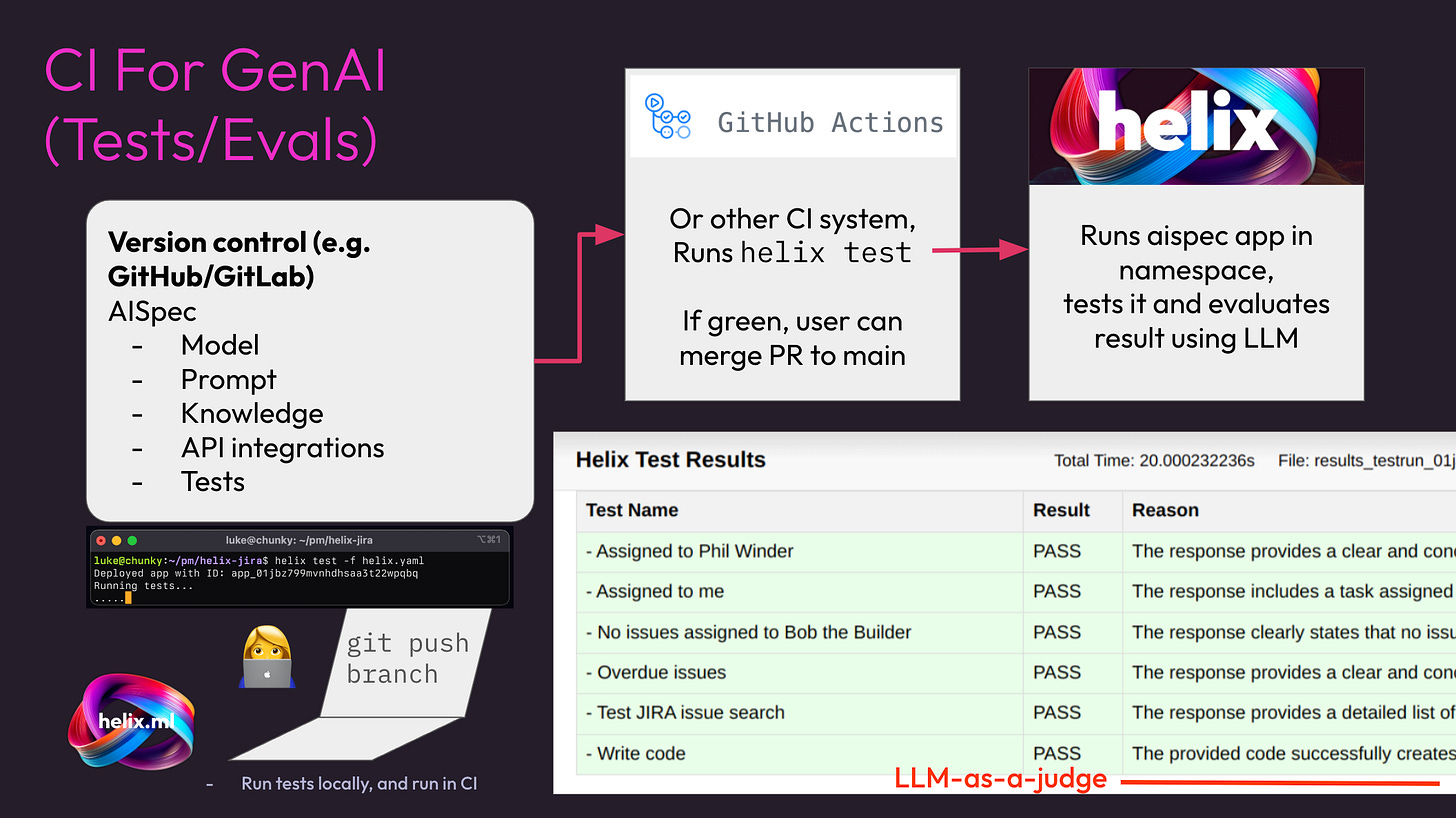

So, we put together the helix test command, and used it to develop our own JIRA integration. Check out my AI for the Rest of Us talk to see me live-coding the tests for the JIRA app. See also the helix test docs, and example integrations with GitHub Actions and GitLab.

Yet another evals framework, really?

We’re not telling anyone they shouldn’t use generic evals frameworks like DeepEval, Arize Phoenix or Braintrust (the latter two recommended by my friend Hamel Husain). Instead, the Helix evals framework (helix test) is specifically designed to help you test iterations on a Helix App. Those iterations typically involve changing the prompting for an API integration, where the instructions we give the LLM affect how it constructs API calls or summarizes their responses back to the user.

So having a tight loop from editing the prompts in the helix yaml file, to running the tests, to debugging the exact internal sequence of LLM calls, to maybe fixing a bug in a test, and so on, is super powerful. My colleague Phil Winder, who’s notoriously hard to please, even liked the tight integration from test results → debug button → exact internal LLM calls that were used to generate the result.
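To make the "each requested feature is a test case" idea concrete, here is a minimal sketch of that kind of loop in Python. It is not the helix test format or implementation: the `EvalCase` shape, the substring check and the `fake_model` stand-in are all assumptions made up for illustration, and a real run would call the app under test instead of a canned function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """One requested feature expressed as a test case (hypothetical shape)."""
    name: str
    prompt: str
    must_contain: List[str]  # substrings the generated JQL is expected to include


def fake_model(prompt: str) -> str:
    """Stand-in for the LLM call; returns canned JQL so the sketch runs offline."""
    if "overdue" in prompt:
        return "project = DS AND duedate < now() AND resolution = Unresolved"
    return "project = DS ORDER BY created DESC"


def run_evals(cases: List[EvalCase], model: Callable[[str], str]) -> Dict[str, dict]:
    """Run every case and keep the raw output so failures can be debugged."""
    results = {}
    for case in cases:
        output = model(case.prompt)
        missing = [s for s in case.must_contain if s not in output]
        results[case.name] = {"passed": not missing, "missing": missing, "output": output}
    return results


CASES = [
    EvalCase("overdue issues", "List overdue issues in project DS", ["duedate < now()"]),
    EvalCase("open bugs", "Show open bugs in project DS", ["issuetype = Bug"]),
]

if __name__ == "__main__":
    for name, result in run_evals(CASES, fake_model).items():
        status = "PASS" if result["passed"] else "FAIL"
        print(f"{status} {name}: {result['output']}")
```

The second case fails on purpose: that is the moment where you would go and edit the prompt, re-run, and check that the first case did not regress.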

K8s operator & Flux support

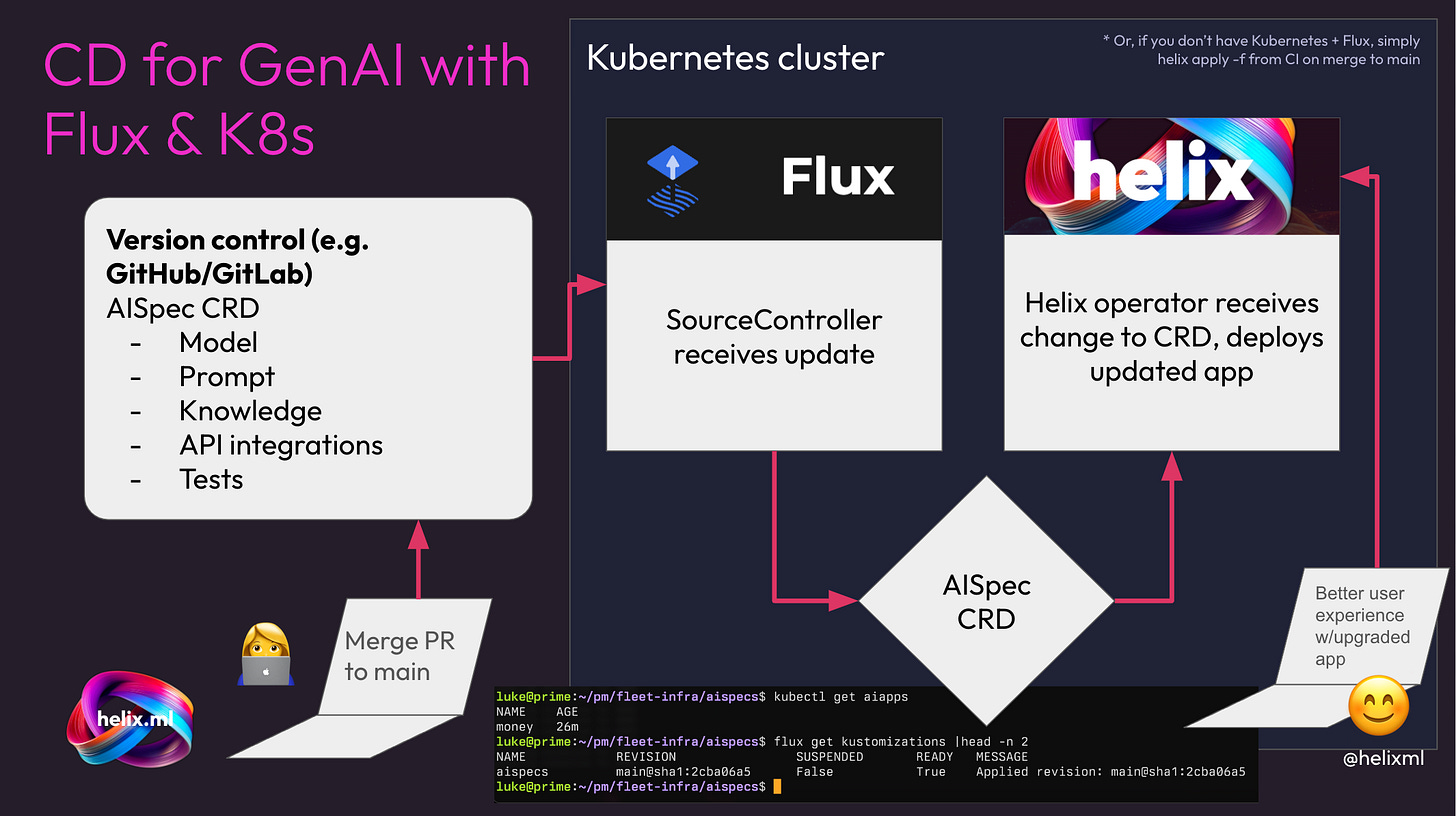

As well as the evals framework, which enables testing and therefore continuous integration (CI) for GenAI Apps, we developed continuous delivery support with Flux and the new Helix Kubernetes Operator.

We used the handy kubebuilder project to quickly scaffold our operator, and then implemented reconciliation logic from the AIspec CRD to the Helix API. Piece of cake! https://github.com/helixml/helix/blob/main/operator/internal/controller/aiapp_controller.go#L55

Fighting the Go module system was the harder part ;-)

This means we now support a full continuous delivery flow: you push (or merge via a PR) an AIspec-compatible CRD to the main branch, Flux automatically picks it up and deploys the change from Git to the cluster, and the Helix operator automatically reconciles it into your production deployment!
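If you haven’t met the reconcile pattern before, the core idea is small: compare the desired state (what is in Git) with the actual state (what the API reports) and work out the steps that close the gap. The sketch below shows that idea in Python with in-memory dictionaries. It is not the operator’s code (that is Go, scaffolded with kubebuilder), and the app names, spec fields and the `plan_reconcile` / `apply_plan` helpers are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical shape: app name -> spec dict. Not the real AIspec CRD schema.
AppSpecs = Dict[str, dict]


def plan_reconcile(desired: AppSpecs, actual: AppSpecs) -> List[Tuple[str, str]]:
    """Return the (action, app_name) steps needed to make actual match desired."""
    steps = []
    for name, spec in desired.items():
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))
    for name in actual:
        if name not in desired:
            steps.append(("delete", name))
    return sorted(steps)


def apply_plan(steps: List[Tuple[str, str]], desired: AppSpecs, actual: AppSpecs) -> AppSpecs:
    """Apply the plan to an in-memory copy that stands in for the Helix API."""
    state = dict(actual)
    for action, name in steps:
        if action == "delete":
            state.pop(name)
        else:  # create and update both write the desired spec
            state[name] = desired[name]
    return state


if __name__ == "__main__":
    desired = {"jira-bot": {"model": "llama3.1"}, "hr-rag": {"model": "aya"}}
    actual = {"jira-bot": {"model": "llama3"}, "old-app": {"model": "mixtral"}}
    steps = plan_reconcile(desired, actual)
    print(steps)
    print(plan_reconcile(desired, apply_plan(steps, desired, actual)))  # nothing left to do
```

The useful property is that reconciling twice is harmless: once the actual state matches Git, the plan is empty.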

Here’s a full example walkthrough of the reference architecture.

Hardened scheduler

The previous Helix scheduler had a lot of the scheduling logic in the runner. The API server simply provided a filterable list of work to be done, and runners would randomly poll for work with appropriate filters (e.g. how much GPU memory could be made available as required). This seemed like a good idea in December 2023, when we were just getting started, but in practice it was impossible to make it totally reliable in the face of concurrent requests on high latency links (our control plane is in the UK, our GPUs in the US on a gig switch that often gets contended – perfect conditions for testing!).

However hard we tried, we’d still end up with over-scheduling from time to time. So Phil heroically rewrote the scheduler while the plane was flying (over a series of releases), gradually moving more and more state into the API, so now we have a much smarter, more reliable centralized scheduler in the control plane. This opens the door to smarter scheduling strategies too.
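Here is a toy illustration of why moving the state into one place helps. It is a sketch under my own assumptions, not the Helix scheduler: the `Runner` and `CentralScheduler` names, the "most free memory wins" strategy and the memory figures are all invented. The point is only that when a single component owns the allocation bookkeeping and updates it under a lock, two concurrent requests cannot both claim the same GPU memory.

```python
import threading
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Runner:
    total_mb: int
    allocated_mb: int = 0

    @property
    def free_mb(self) -> int:
        return self.total_mb - self.allocated_mb


@dataclass
class CentralScheduler:
    """Single owner of allocation state (hypothetical, for illustration only)."""
    runners: Dict[str, Runner] = field(default_factory=dict)
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False)

    def schedule(self, required_mb: int) -> Optional[str]:
        """Place work on the runner with the most free memory, or return None."""
        with self._lock:  # check and allocate atomically
            candidates = [
                (runner.free_mb, name)
                for name, runner in self.runners.items()
                if runner.free_mb >= required_mb
            ]
            if not candidates:
                return None  # caller should queue rather than over-schedule
            _, name = max(candidates)
            self.runners[name].allocated_mb += required_mb
            return name

    def release(self, name: str, mb: int) -> None:
        """Give memory back when a piece of work finishes."""
        with self._lock:
            self.runners[name].allocated_mb -= mb


if __name__ == "__main__":
    scheduler = CentralScheduler({"a": Runner(24000), "b": Runner(16000)})
    print(scheduler.schedule(20000))  # only "a" has room
    print(scheduler.schedule(10000))  # "a" is nearly full, so "b"
    print(scheduler.schedule(10000))  # nothing fits, so None
```

Contrast that with runners polling independently over a slow link: each one decides based on a view of the world that may already be stale by the time its claim arrives.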

Long context models

When we started building the JIRA integration for the Saudi bank, as well as not having easy evals to turn to, we had another problem. The model we were previously using with Helix, llama3:instruct via Ollama, suffered from two short-context problems. First, Ollama’s OpenAI-compatible chat completions API defaulted to a 2K context window. Second, even once we got past that, llama3 itself only supported 8K.

This bit us as soon as we started trying to use any non-trivial JSON responses from JIRA (which is rather verbose in its API responses). Phil valiantly updated our runner code to use the Ollama API instead of the OpenAI API (so as a side effect Helix now offers the only OpenAI compatible API to Ollama that supports non-trivial context lengths), and then I fought the demons that were upgrading Ollama in our runners to support the latest models. We now support out of the box: Llama 3.1 and 3.2, Phi 3.5, Mixtral, Gemma2, Qwen2.5, Hermes 3, Aya, Mistral Nemo and more, all with long context windows.
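For the curious, the reason the native Ollama API helps is that, as far as I know, it accepts a per-request `num_ctx` option that sets the context window in tokens. The sketch below only builds such a request body in Python so you can see the shape. It is not our runner code (which is Go), and the model name, message and context size are illustrative values rather than what Helix sends.

```python
import json


def build_ollama_chat_request(model: str, user_message: str, num_ctx: int) -> dict:
    """Build a body for Ollama's native /api/chat endpoint with an explicit context size."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,
        "options": {"num_ctx": num_ctx},  # context window in tokens
    }


if __name__ == "__main__":
    # Illustrative values only; pick a size that fits your GPU.
    body = build_ollama_chat_request("llama3.1", "Summarize this JIRA response", 32768)
    print(json.dumps(body, indent=2))
```

You would POST that body to the `/api/chat` endpoint of your Ollama server.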

We were surprised at how much increasing the context length increases the GPU memory usage (even with Flash Attention turned on), so we tuned the context lengths we use by default so that things fit in sensibly sized GPUs. For the full set of models and context lengths we support, check https://github.com/helixml/helix/blob/main/api/pkg/model/models.go#L151
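A back-of-the-envelope calculation shows where a lot of that memory goes. The KV cache stores a key and a value vector for every layer and every token in the context, so it grows linearly with context length. The Python sketch below assumes a 16-bit cache and the commonly cited shape of an 8B Llama 3.1 style model (32 layers, 8 KV heads, head dimension 128); those figures are my assumptions for the arithmetic, not numbers taken from Helix, and real usage also includes weights, activations and runtime overhead.

```python
def kv_cache_bytes(context_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV cache size: one key and one value vector per layer per token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * context_len


# Assumed architecture figures for an 8B Llama 3.1 style model with grouped-query attention.
LLAMA_8B = dict(n_layers=32, n_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    for ctx in (2048, 8192, 32768, 131072):
        gib = kv_cache_bytes(ctx, **LLAMA_8B) / 2**30
        print(f"{ctx:>7} tokens -> {gib:.2f} GiB of KV cache")
```

Under those assumptions the cache costs 128 KiB per token, so 8K of context is about 1 GiB and 128K is about 16 GiB, per concurrent sequence. Flash Attention cuts the memory needed for the attention computation itself, but the cache still has to live somewhere, which fits with what we saw.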

JIRA

We developed a JIRA integration using the evals framework, which you can find here: https://github.com/helixml/helix-jira

You can ask your JIRA chatbot: write me code for issue DS-9, and it will write a tic-tac-toe game in React!

Multi-lingual RAG

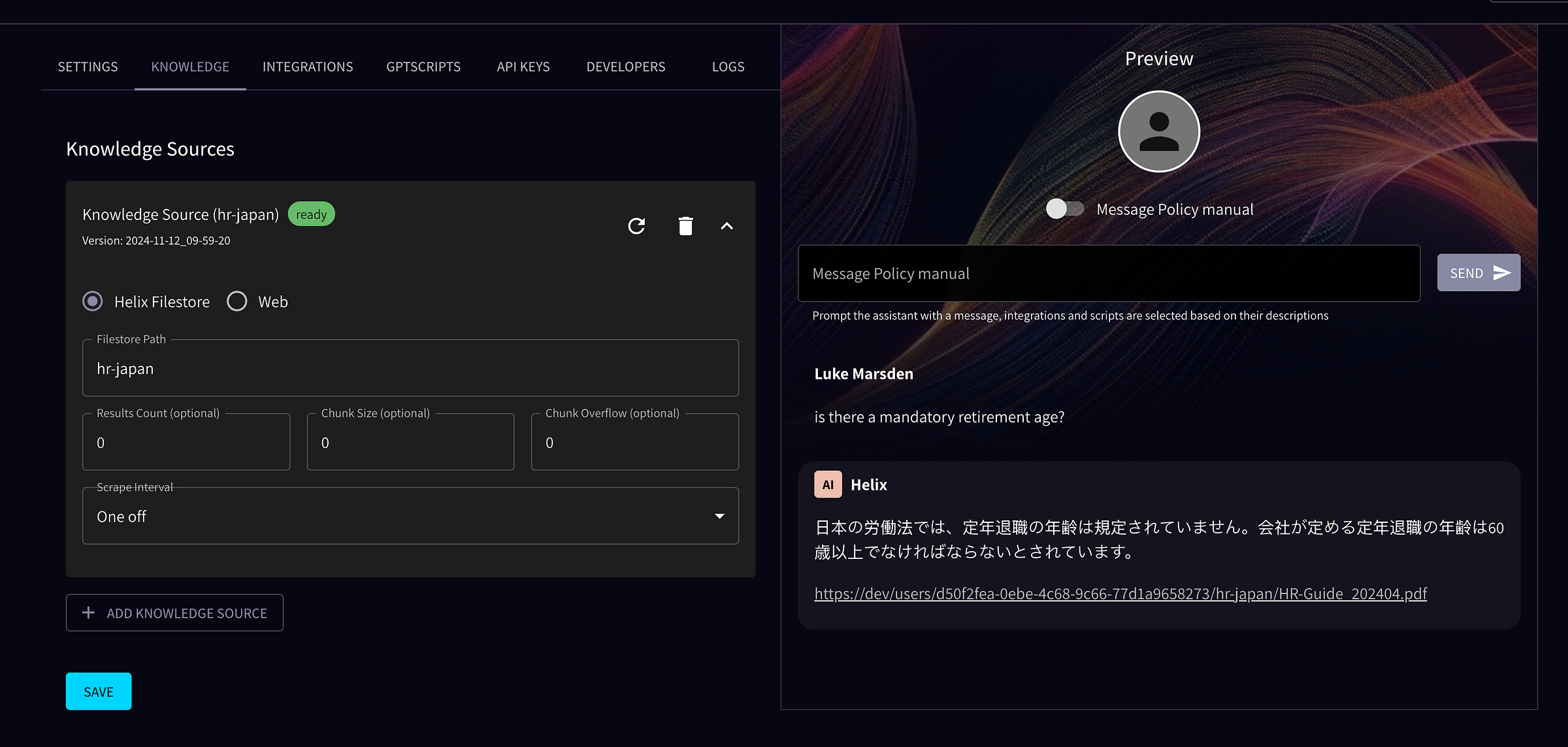

Have you ever wanted to talk to your HR handbook in Arabic or Japanese? Now you can:

Which, Google Translate reliably informs me, translates to: “Japanese labor law does not specify a mandatory retirement age. The mandatory retirement age set by a company must be 60 years or older.”

Get in touch with us if you want a personalized demo: founders@helix.ml

Check out our revenue and pipeline commercial update, our “we can all be AI engineers” vision blog, and the Helix 1.4 press release.

Resources

Docs for helix test: github.com/helixml/helix/blob/main/examples/test

Luke’s AI for the Rest of Us talk introducing and live demoing helix test:

CI/CD reference architecture: https://github.com/helixml/genai-cicd-ref and complete demo:

We’d love your feedback: Join us on Discord!