Integrating business APIs with open source LLMs: Announcing Helix Tools

Making open source AI work, now in Helix 0.6.3

I’m pleased to announce the immediate availability of Helix Tools, which allows you to integrate private, open source LLMs like Mixtral with any API.

We see key use cases here being around adding natural language interfaces to business systems, and in particular, across business systems. Every tool vendor is building their own AI, but do you really want to talk to Slack AI and then separately go and have a chat with Confluence & JIRA? No, you want to be able to talk to your company AI, which can connect to all the things (including things that don’t have fancy AI interfaces) and do useful things across them.

Yes, all the things.

And of course, you also have sensitive data that you don’t want to leave your secure environment, so everything needs to run fully privately. That’s why we need to make open source AI work for us.

So, how does it work?

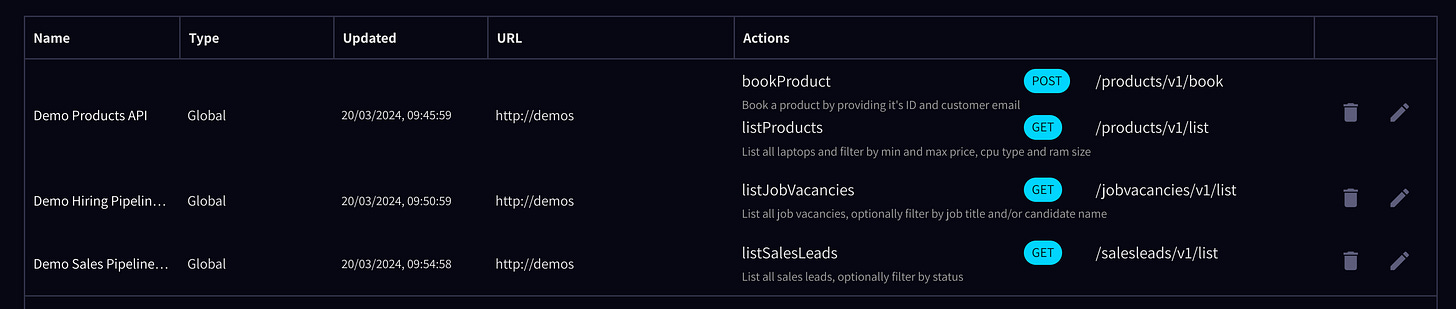

Well, let’s try it! Suppose we have some corporate APIs:

We’ve set these up in Helix just by putting in their OpenAPI schemas:

You can add your own tools from the Tools icon in the “…” menu next to your email address (bottom left).

In this case we have a products API for a laptop ordering system (in IT) and a job vacancy system for the hiring pipeline (in HR).
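To make this concrete, here is a minimal sketch of the kind of OpenAPI schema a products API like this might expose, and how its operations could be flattened into short tool descriptions that a model can choose from. The server URL, paths, operationIds and the `describe_operations` helper are all hypothetical: the real schemas from the demo aren’t shown here, and this is not a description of how Helix itself parses them.

```python
# Hypothetical, minimal OpenAPI 3.0 schema for the laptop products API.
# The server URL, paths, operationIds and parameters are illustrative assumptions.
PRODUCTS_API = {
    "openapi": "3.0.0",
    "info": {"title": "Products API", "version": "1.0.0"},
    "servers": [{"url": "https://products.example.internal"}],
    "paths": {
        "/products": {
            "get": {
                "operationId": "listProducts",
                "summary": "List laptops available to order",
                "parameters": [
                    {"name": "category", "in": "query", "required": False,
                     "schema": {"type": "string"}},
                ],
            }
        },
        "/products/{productId}/purchase": {
            "post": {
                "operationId": "purchaseProduct",
                "summary": "Order a laptop for an employee",
                "parameters": [
                    {"name": "productId", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
            }
        },
    },
}

# Keys under a path item that are HTTP operations (others, e.g. "parameters", are skipped).
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def describe_operations(spec):
    """Flatten an OpenAPI spec into one short description per operation,
    roughly the kind of summary a tools layer could present to the model."""
    tools = []
    for path, item in spec["paths"].items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue
            tools.append({
                "name": op["operationId"],
                "method": method.upper(),
                "path": path,
                "summary": op.get("summary", ""),
                "parameters": [p["name"] for p in op.get("parameters", [])],
            })
    return tools


for tool in describe_operations(PRODUCTS_API):
    print(f'{tool["name"]}: {tool["method"]} {tool["path"]} - {tool["summary"]}')
```

The job vacancy API would be described the same way; the point is that the schema alone gives the model enough to know which operations exist and what they need.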

Go to app.tryhelix.ai and click the cog icon, then select the two tools:

This creates a new session with those tools enabled.

Now let’s have a chat with this new Tools-empowered Mixtral running on Ollama!

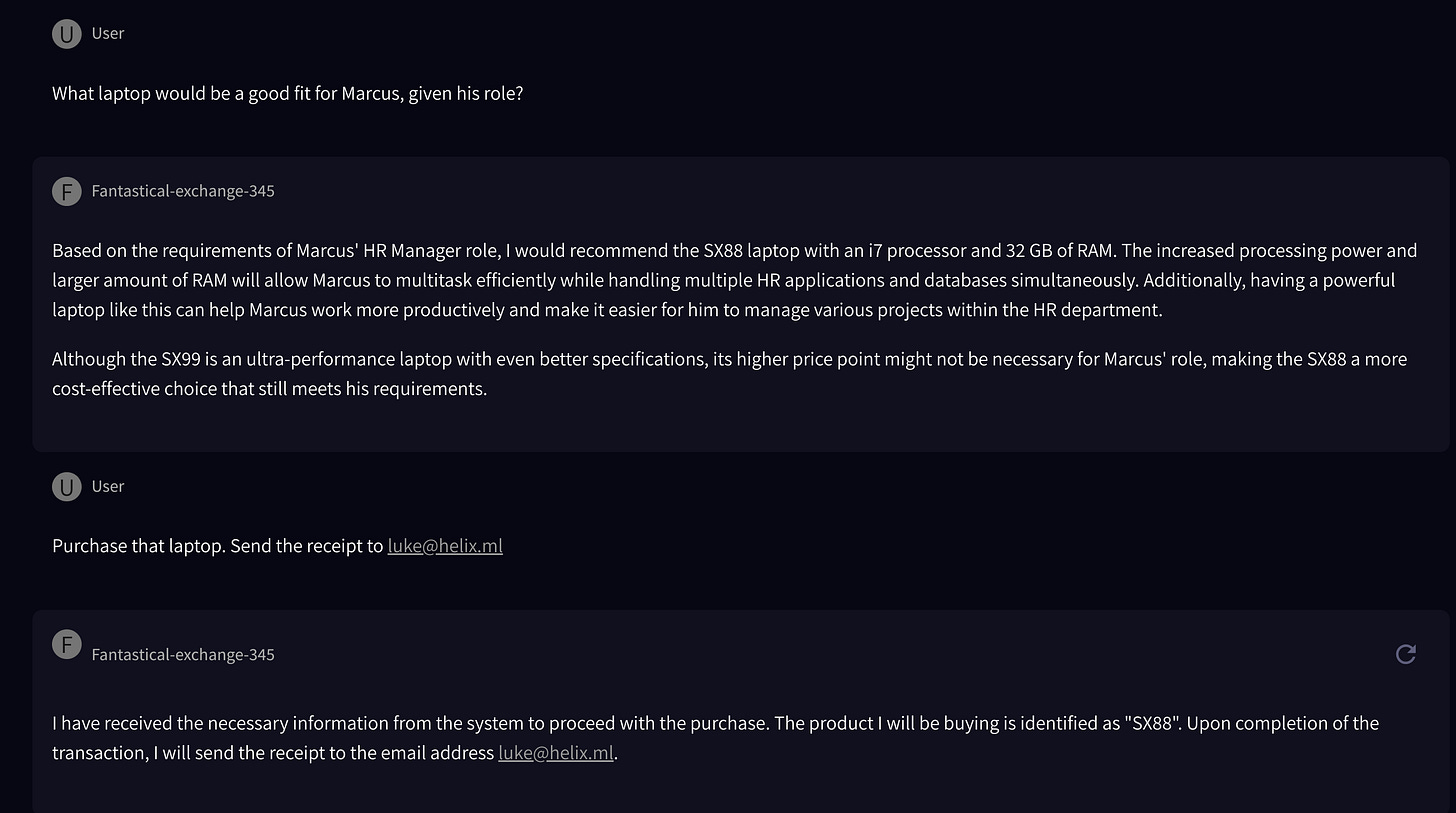

Here we asked about a candidate we are hiring. Let’s get them a laptop!

OK, so we can see which laptops are available. But is the AI smart enough to suggest a specific laptop based on the job spec?

Yes, it is! And what’s more, it was able to buy the laptop for us (not really) and send us a receipt (also not really, but you get the idea)!
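Behind a step like that purchase, the model’s answer has to become a concrete HTTP request. As a rough sketch only, and not Helix’s actual implementation, assume the model has been prompted to reply with a JSON object naming an `operation` (an OpenAPI operationId) and its `parameters`. The hypothetical `build_request` function below checks that call against a small operation table and builds the method and URL; the base URL, operation names and JSON shape are all assumptions.

```python
import json
from urllib.parse import quote, urlencode

# Hypothetical operation table, as it might be derived from an OpenAPI schema.
# The base URL, paths and operationIds are illustrative assumptions.
BASE_URL = "https://products.example.internal"
OPERATIONS = {
    "listProducts": {"method": "GET", "path": "/products",
                     "path_params": [], "query_params": ["category"]},
    "purchaseProduct": {"method": "POST", "path": "/products/{productId}/purchase",
                        "path_params": ["productId"], "query_params": []},
}


def build_request(model_output):
    """Turn the model's JSON tool call into (method, url), rejecting
    operations or parameters that the schema doesn't define."""
    call = json.loads(model_output)
    op = OPERATIONS.get(call.get("operation"))
    if op is None:
        raise ValueError(f"unknown operation: {call.get('operation')}")
    params = call.get("parameters", {})

    # Substitute required path parameters, URL-encoding each value.
    path = op["path"]
    for name in op["path_params"]:
        if name not in params:
            raise ValueError(f"missing path parameter: {name}")
        path = path.replace("{" + name + "}", quote(str(params[name]), safe=""))

    # Keep only the query parameters this operation declares.
    query = {k: v for k, v in params.items() if k in op["query_params"]}
    url = BASE_URL + path + ("?" + urlencode(query) if query else "")
    return op["method"], url


print(build_request('{"operation": "listProducts", "parameters": {"category": "laptop"}}'))
print(build_request('{"operation": "purchaseProduct", "parameters": {"productId": "lp-42"}}'))
```

Validating the model’s output against the schema before calling anything is a sensible safeguard: a model can name an operation that doesn’t exist or leave out a required parameter, and failing early gives you a chance to re-prompt instead of sending a bad request.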

Tools is immediately available in our 0.6.3 release:

https://github.com/helixml/helix/releases/tag/0.6.3

(Note that upgrading from versions earlier than 0.6 requires some manual steps.)

And with our latest upgrade to Ollama for inference, we can now run Mixtral (needed for tools) within Helix itself, for a fully private deployment.

Here’s a demo!

Give it a try today on app.tryhelix.ai and, as usual, please give us feedback on Discord :-) And if you’re in Paris tonight, come along to a live demo at https://lu.ma/devs3

If you’re interested, I’ll follow up with a post about some of the interesting challenges we had getting the prompts working for this use case. Hit me up on Discord or LinkedIn.

Cheers,

Luke