Running Ollama server-side

How to run Ollama on a server and handle multiple concurrent requests to saturate GPU memory

Hi folks, it’s been a month since we launched OpenAI-compatible APIs, sorted out fine-tuning shenanigans and implemented various improvements to make self-hosting Helix as easy as possible.

In this article we would like to show you our second model runtime implementation, which lets you quickly add different models to your self-hosted AI platform.

Note: we are keeping the previous, Axolotl-based runtime. The new one is an addition that gives us better performance and model availability guarantees.

What is Ollama?

Ollama has been steadily gaining more and more traction. So, what is Ollama? Some people say that it’s just a wrapper around llama.cpp while others tout how it’s a lot more. I personally think it’s amazing and it saves you from the usual Python dependency hell.

Important parts of Ollama:

It can run on CPU as a fallback. This is actually great for testing or when you just don’t have a beefy GPU available.

It has a model registry from which you can pull models, similar to how you would pull Docker images. This model registry has:

Lots of models: https://ollama.com/library

All models have configured system prompts, tokenization instructions and chat templates

It has llama.cpp built in so you can run models (this is the part that does the work)

It has a Modelfile, which describes what kind of tokenization the model uses. Believe me, you don’t want to do this yourself 😂

It has an API to pull models and also an OpenAI-compatible API for chat completions
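To make the last point a bit more concrete, here is a minimal Python sketch that builds (but does not send) a model pull request and an OpenAI-style chat completion request. It assumes Ollama is listening on its default local address and that the `/api/pull` and `/v1/chat/completions` paths and payload fields match your Ollama version. The helper names and the `mistral` model tag are only for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's default listen address; adjust for your server.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model, base_url=OLLAMA_URL):
    """Build a request asking Ollama to pull a model from its registry."""
    # Assumption: the field is called "model"; older releases used "name".
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_chat_request(model, prompt, base_url=OLLAMA_URL):
    """Build an OpenAI-style chat completion request for Ollama."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a server running, `send(build_pull_request("mistral"))` followed by `send(build_chat_request("mistral", "Hello"))` would pull the model and then ask it a question.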

How does concurrency work with Ollama?

At the time of writing this article there are two main issues to resolve if you want to run models with Ollama concurrently:

Multiple concurrent requests will be executed sequentially

If a request targets a different model, the current one is unloaded and the new one is loaded before inference

This is not ideal if you have a graphics card like an Nvidia RTX 3090 or RTX 4090, or a more luxurious one such as an A100 or H100 (or a vintage edition such as the V100), because more than one instance of a popular model such as Mistral 7B can fit into its memory.
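A quick back-of-the-envelope calculation shows how much capacity sequential execution can leave idle. The sketch below is only arithmetic. The 6 GB per instance figure is an assumption for a quantized 7B model plus its context cache, and the 1 GB reserve is arbitrary, so measure real usage (for example with `nvidia-smi`) before relying on it.

```python
def instances_that_fit(gpu_memory_gb, model_memory_gb, reserved_gb=1.0):
    """Return how many copies of a model fit into GPU memory.

    reserved_gb is headroom kept free for the driver and other processes
    (an assumed value, not something Ollama reports).
    """
    if model_memory_gb <= 0:
        raise ValueError("model_memory_gb must be positive")
    usable_gb = gpu_memory_gb - reserved_gb
    return max(0, int(usable_gb // model_memory_gb))


# A 24 GB card (RTX 3090 / RTX 4090) with an assumed 6 GB per instance.
print(instances_that_fit(24, 6))
# An 80 GB card with the same assumed footprint.
print(instances_that_fit(80, 6))
```

Under these assumptions a 24 GB card has room for three instances, while a single Ollama process would only ever keep one of them busy.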

How to better utilise your GPU machine?

The easiest way to utilise your GPU fully (or as fully as the models you use allow) is to check how many model instances fit into your machine’s GPU memory and start that many Ollama instances. Once they are running, put an HTTP proxy such as Caddy or Nginx in front of them to load balance the traffic.
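One way to start several instances side by side is to give each its own port through the `OLLAMA_HOST` environment variable. The following is a minimal sketch that assumes the `ollama` binary is on your `PATH`. The helper names and the choice of consecutive ports are illustrative, not something Ollama prescribes.

```python
import os
import subprocess


def plan_instances(count, first_port=11434):
    """Assign consecutive ports to the requested number of instances."""
    return [first_port + offset for offset in range(count)]


def instance_env(port, host="127.0.0.1"):
    """Copy the current environment and bind one Ollama instance to its own port."""
    env = dict(os.environ)
    env["OLLAMA_HOST"] = f"{host}:{port}"
    return env


def start_instances(ports):
    """Start one `ollama serve` process per port (requires Ollama to be installed)."""
    return [
        subprocess.Popen(["ollama", "serve"], env=instance_env(port))
        for port in ports
    ]
```

Calling `start_instances(plan_instances(3))` would launch three servers on ports 11434 to 11436, and those addresses then become the upstreams in your Caddy or Nginx configuration.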

If you need to serve different models, you will also need routing logic in the proxy, so that requests for a given model go to an Ollama instance that already has that model loaded.
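The routing decision itself is small. Here is a hedged sketch of it in Python: it reads the `model` field from an OpenAI-style request body and round-robins across the instances registered for that model. `ModelRouter` and its methods are hypothetical names, and a real setup would express the same idea in your proxy's configuration or in a small service behind it.

```python
import json
from collections import defaultdict


def model_from_body(raw_body):
    """Extract the model name from an OpenAI-style JSON request body."""
    return json.loads(raw_body)["model"]


class ModelRouter:
    """Route each request to an instance that already has its model loaded."""

    def __init__(self):
        self._backends = defaultdict(list)  # model name -> backend URLs
        self._next = defaultdict(int)       # model name -> round-robin position

    def register(self, model, url):
        """Record that the instance at `url` has `model` loaded."""
        self._backends[model].append(url)

    def pick(self, model):
        """Return the next backend for `model`, rotating between instances."""
        urls = self._backends.get(model)
        if not urls:
            raise LookupError(f"no running instance has {model!r} loaded")
        index = self._next[model] % len(urls)
        self._next[model] += 1
        return urls[index]
```

For example, after registering two `mistral` instances and one `llama2` instance, consecutive `mistral` requests alternate between the first two backends and never hit the `llama2` one, so no model has to be unloaded.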

With any luck you will be saturating your machine’s GPU.

Is there an easier way?

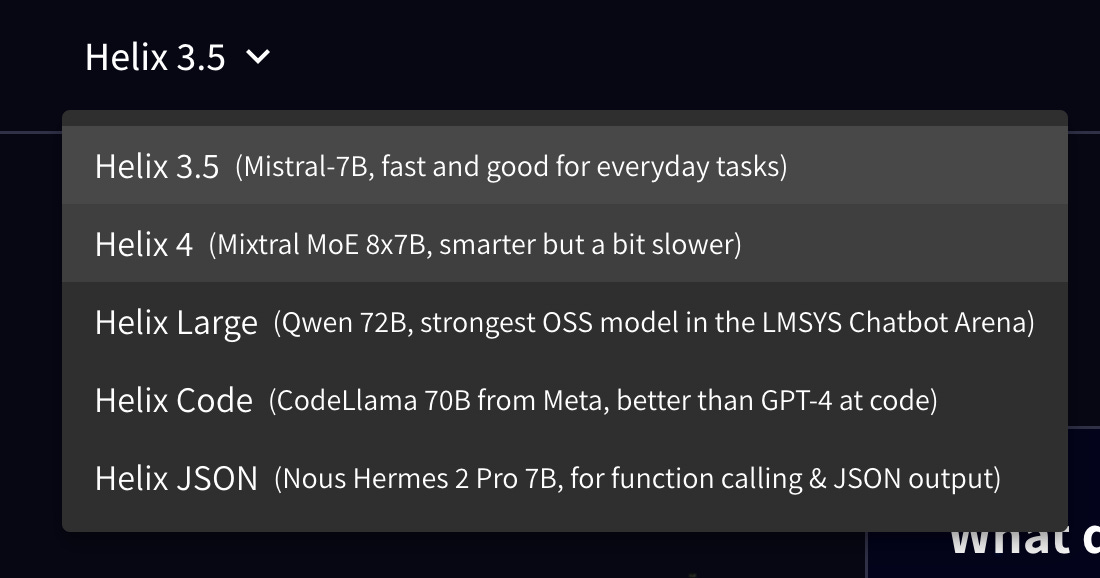

We have just added Ollama integration into Helix. For us, it gives the ability to support many more models and enable new ones as they come out.

What Helix does on top of just using Ollama as a runtime:

Enables you to run multiple concurrent Ollama instances to saturate available GPU memory. Scheduling is based on the memory you make available, so if you give Helix less memory than the GPU has, you can also run something else on the side.

Helix routes traffic to already running instances so there’s no time wasted on unloading/loading the model

Preserves conversation history

Next steps (inference runtimes)

While we are migrating away from an Axolotl-only runtime, Ollama is not our last stop. Helix will move forward and also include vLLM as an option.

The drill is the same though: multiple vLLM instances running side-by-side, with Helix managing memory and loaded models. Advantages of enabling it:

Best throughput out there

Continuous batching of incoming requests - this is where we will also control concurrency from within the running model instance.
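To give a feel for why continuous batching helps throughput, here is a toy Python simulation. It is not how vLLM is implemented: each request is reduced to the number of decoding steps it needs, every step is assumed to cost the same, and the function names are made up for this sketch. Static batching waits for the slowest request in each batch, while continuous batching refills a slot as soon as a request finishes.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Steps needed when each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Steps needed when free slots are refilled after every decoding step."""
    waiting = deque(lengths)
    running = []  # remaining steps for each request currently in the batch
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        steps += 1
        # Advance every running request by one step and drop finished ones.
        running = [left - 1 for left in running if left - 1 > 0]
    return steps


# Four requests needing 2, 8, 2 and 8 decoding steps, two slots.
requests = [2, 8, 2, 8]
print(static_batching_steps(requests, 2))
print(continuous_batching_steps(requests, 2))
```

In this toy example the short requests no longer wait behind the long ones, so the same work finishes in fewer steps. With a single slot, both functions degenerate to plain sequential execution.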

Cons:

It’s not as forgiving as Ollama when it comes to hardware discovery 😬

How to run it yourself

You can run Helix yourself, as long as you have an internet connection. Check out the instructions here: https://docs.helix.ml/helix/private-deployment/

If you encounter any issues, feel free to join our Discord channel and ping us!