Technical Deep Dive on Streaming AI Agent Desktop Sandboxes: When Gaming Protocols Meet Multi-User Access

When we started building sandboxes for AI agents at Helix, we wanted to give each agent its own desktop environment that we could stream interactively to users’ browsers. Not just static screenshots, but full interactive desktops where agents could browse the web, write code, and use tools in collaboration with their human colleagues. We looked at VNC, RDP, and various browser-based solutions, but kept coming back to Moonlight.

Moonlight is a game streaming protocol, originally designed to stream PC games to your couch. It’s fast, efficient, and works beautifully over sketchy network connections. There was just one problem: it was built for single-player gaming, and we needed multi-user agent access.

This is the story of how we bent a gaming protocol to our will, and why we’re still working through the consequences.

Why Stream Desktops for AI Agents?

Most AI coding assistants live in your IDE or terminal. But what if your agent needs to actually see the screen, click buttons, navigate UIs? What if you want to watch your agent work in real-time, or collaborate with it in a proper IDE? And what if you want your agent to be able to run on the server while it does all of this, so it can benefit from a good network connection while you open and close your laptop in cafes, on the train, even on the beach?

That’s what we’re building with Helix Code. We run full Linux desktop environments in containers, each with a GPU attached. Inside each desktop runs an AI agent with access to development tools: Claude, code editors, browsers, and terminals. Users connect to watch and interact with these agents as they work, and they also get a 30,000-foot view of their whole fleet of agents, because we’re all going to become managers of coding agents whether we like it or not.

The challenge: how do you efficiently stream these GPU-accelerated desktops to browsers and native clients, with low latency, across variable network conditions?

Enter Moonlight (and Wolf)

The Moonlight protocol is the streaming protocol NVIDIA originally created for its GameStream technology; Moonlight itself began as an open-source client for it. The protocol is designed to stream high-framerate, low-latency video from a gaming PC to another device. Think playing Cyberpunk on your iPad from your gaming rig upstairs.

We use Wolf, a C++ implementation of the Moonlight server that runs in containers. Wolf exposes the Moonlight protocol, and clients can connect using Moonlight-web in the browser or native Moonlight clients on Mac, Windows, Linux, Android, iOS.

The setup is elegant: Wolf manages Docker containers with GPU attachment, Moonlight handles the video streaming, and we get hardware-accelerated desktop streaming working smoothly over 4G.

There’s just one catch.

The Protocol Mismatch

Moonlight was designed around a simple mental model: one user, streaming one game at a time. You connect, you launch Steam, you play. You can disconnect and reconnect, and your game is still running. But each client gets its own instance.

Here’s where it breaks for us:

Moonlight expects: Each client connects to start its own private game session

We need: Multiple users connecting to the same shared agent session

In Moonlight’s world, if two clients try to start Steam, each gets its own Steam instance. That’s great for gaming: you wouldn’t want your roommate’s controller inputs affecting your game.

But for us, if two people connect to the same AI agent, we don’t want two separate agent instances. We want them both watching, and potentially interacting with, the same agent doing the same work. The agent has identity and state: it’s logged into services, it has files open, it’s in the middle of tasks.

The semantics just don’t match.
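To make the mismatch concrete, here is a toy Python model of the two semantics. The class and method names are hypothetical, not Wolf's or Moonlight's code; what matters is the key each server uses to find a session: the client in Moonlight's model, the agent in ours.

```python
from dataclasses import dataclass, field
from itertools import count

# Toy model of session *semantics* only; not Wolf's or Moonlight's data structures.
_ids = count(1)


@dataclass
class Container:
    app: str
    container_id: int = field(default_factory=lambda: next(_ids))


class AppsModeServer:
    """Moonlight's model: each client that launches an app gets its own container."""

    def __init__(self) -> None:
        self.sessions: dict[str, Container] = {}

    def launch(self, client_id: str, app: str) -> Container:
        # Keyed by client: reconnecting finds your own session again,
        # but a second client always gets a fresh, separate instance.
        if client_id not in self.sessions:
            self.sessions[client_id] = Container(app)
        return self.sessions[client_id]


class SharedAgentServer:
    """What agents need: one container per agent, shared by every viewer."""

    def __init__(self) -> None:
        self.agents: dict[str, Container] = {}
        self.viewers: dict[str, set[str]] = {}

    def attach(self, client_id: str, agent_id: str) -> Container:
        # Keyed by agent: everyone attaching to the same agent shares one container.
        if agent_id not in self.agents:
            self.agents[agent_id] = Container(agent_id)
        self.viewers.setdefault(agent_id, set()).add(client_id)
        return self.agents[agent_id]


apps = AppsModeServer()
alice_zed = apps.launch("alice", "zed")
bob_zed = apps.launch("bob", "zed")  # a second, independent Zed instance

shared = SharedAgentServer()
alice_view = shared.attach("alice", "agent-42")
bob_view = shared.attach("bob", "agent-42")  # the very same container
```

Everything else (encoding, input, GPU attachment) could be identical between the two; the choice of key alone decides whether two viewers share an agent.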

Apps Mode: Our First Workaround

In “apps mode” (the standard Moonlight protocol), Wolf creates containers on demand when the first client connects. This presents another problem: when does the agent actually start?

We want agents to start automatically when users drag tasks onto a Kanban board, or when the system kicks off autonomous work. We can’t wait for someone to connect with a browser before the agent starts running.

Our solution was a bit of a hack: the Helix API pretends to be a Moonlight client.

When Helix starts a new agent session, it makes a WebSocket connection to Moonlight-web, pretending to be a browser. It initiates a “kickoff session” that starts the container and establishes fixed video parameters (4K, 60fps). Then it immediately disconnects.

Now the agent is running, the desktop is up, and real users can connect to it.
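A minimal sketch of the kickoff flow in Python. It assumes a generic text-frame transport (in practice, a WebSocket to Moonlight-web) and uses a hypothetical `kickoff_session` helper with hypothetical message names (`start_stream`, `stream_started`); Moonlight-web's real wire protocol is not reproduced here.

```python
import json
from dataclasses import dataclass
from typing import Protocol


class Transport(Protocol):
    """Anything that can exchange text frames, e.g. a WebSocket client."""

    def send(self, message: str) -> None: ...
    def recv(self) -> str: ...
    def close(self) -> None: ...


@dataclass(frozen=True)
class VideoParams:
    width: int = 3840  # 4K UHD
    height: int = 2160
    fps: int = 60


def kickoff_session(transport: Transport, app_id: str,
                    params: VideoParams = VideoParams(),
                    max_messages: int = 100) -> None:
    """Start an agent's container by posing as a browser client, then leave.

    The message types ("start_stream", "stream_started") are hypothetical
    placeholders, not Moonlight-web's real wire format.
    """
    try:
        transport.send(json.dumps({
            "type": "start_stream",
            "app_id": app_id,
            "width": params.width,
            "height": params.height,
            "fps": params.fps,
        }))
        # Wait for confirmation that the container and video pipeline exist.
        for _ in range(max_messages):
            if json.loads(transport.recv()).get("type") == "stream_started":
                return
        raise RuntimeError(f"stream for {app_id!r} never started")
    finally:
        # Always disconnect: the container keeps running without us, just as a
        # game keeps running after a Moonlight client drops, so users can join.
        transport.close()


class FakeTransport:
    """In-memory stand-in for a WebSocket, for trying the flow locally."""

    def __init__(self, replies: list[str]) -> None:
        self.sent: list[dict] = []
        self.replies = list(replies)
        self.closed = False

    def send(self, message: str) -> None:
        self.sent.append(json.loads(message))

    def recv(self) -> str:
        return self.replies.pop(0)

    def close(self) -> None:
        self.closed = True


fake = FakeTransport(['{"type": "connecting"}', '{"type": "stream_started"}'])
kickoff_session(fake, "zed-agent")
```

The `try`/`finally` is the important design choice: the impersonated "browser" always disconnects, even if the start fails, so it never lingers as a phantom viewer.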

But we still have the multi-client problem. If someone connects with an external Moonlight client and launches the agent, they get a completely separate container from the one being streamed to the browser. You end up with multiple “Zed” IDE instances, all thinking they’re the same agent, all trying to stream back, and all treading on each other’s toes.

Apps mode is stable, but it’s fundamentally single-user.

Lobbies Mode: The Real Solution

Wolf recently added “lobbies mode”, a feature explicitly designed for multiplayer scenarios: split-screen gaming, multiple controllers, shared screens.

This is exactly what we need.

In lobbies mode:

You start a lobby through Wolf’s API

The container starts immediately (no need for our kickoff hack)

Multiple clients can connect to the same lobby

Everyone sees the same screen

Screen resolution is pre-configured, not determined by the first connecting client
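A toy model of the lobby lifecycle described above, assuming a lobby boils down to one running container plus a set of attached clients. `Lobby` and `LobbyManager` are hypothetical names, not Wolf's lobby API; the sketch only encodes the properties in the list: the container starts at creation, any number of clients join the same lobby and see the same screen, and the resolution is fixed up front.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical model of the lobby lifecycle; not Wolf's lobby API.
_lobby_ids = count(1)


@dataclass
class Lobby:
    agent_id: str
    width: int
    height: int
    fps: int
    lobby_id: int = field(default_factory=lambda: next(_lobby_ids))
    running: bool = False
    clients: set[str] = field(default_factory=set)


class LobbyManager:
    def __init__(self) -> None:
        self.lobbies: dict[int, Lobby] = {}

    def create(self, agent_id: str, width: int, height: int, fps: int = 60) -> Lobby:
        # Resolution is chosen here, when the agent is created,
        # not negotiated by whichever client happens to connect first.
        lobby = Lobby(agent_id, width, height, fps)
        # The container starts immediately: no client (and no kickoff hack) needed.
        lobby.running = True
        self.lobbies[lobby.lobby_id] = lobby
        return lobby

    def join(self, lobby_id: int, client_id: str) -> Lobby:
        # Browser and native clients join the same lobby and receive the same frames.
        lobby = self.lobbies[lobby_id]
        lobby.clients.add(client_id)
        return lobby

    def leave(self, lobby_id: int, client_id: str) -> None:
        # Viewers come and go; the agent keeps working even with nobody watching.
        self.lobbies[lobby_id].clients.discard(client_id)


manager = LobbyManager()
lobby = manager.create("agent-42", width=3840, height=2160)
manager.join(lobby.lobby_id, "browser-alice")
manager.join(lobby.lobby_id, "ios-bob")
manager.leave(lobby.lobby_id, "browser-alice")
```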

We’re currently migrating to lobbies mode. It solves our fundamental architecture problems:

Multiple users can connect to the same agent

Agents start without any client connection needed

Browser and native clients can connect to the same session

We can delete all the kickoff session complexity

The Current Reality (And Remaining Bugs)

Lobbies mode is still being stabilized. A few weeks ago it had memory leaks and stability issues. The Wolf maintainer has done heroic work making it production-ready, but we’re still ironing out bugs:

Input scaling is broken: When you connect with a different screen resolution from the one the lobby was configured for, Wolf rescales the video correctly, but mouse coordinates are scaled incorrectly. Click where you see a button, and the click lands somewhere else entirely.

Video corruption on some clients: Connecting from a Mac sometimes results in corrupted video streams. We’re still debugging this.

Resolution flexibility: In apps mode, each client could negotiate its own optimal resolution. In lobbies mode, we pre-configure the resolution when creating the agent. We let users choose (including “iPhone 15 vertical” because streaming to phones would be cool), but it’s less dynamic.
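The input-scaling bug is easiest to reason about with the mapping written out. Below is a sketch of a client-to-lobby coordinate transform, assuming the client scales the lobby's frame uniformly to fit its window and centers it with black bars (letterboxing). `Size` and `client_to_lobby` are hypothetical names; this is not how Wolf maps input, nor a diagnosis of the bug, only what a correct mapping has to account for: a single scale factor plus the bar offsets.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Size:
    width: int
    height: int


def client_to_lobby(x: float, y: float, client: Size,
                    lobby: Size) -> Optional[tuple[float, float]]:
    """Map a pointer position in the client window to lobby pixel coordinates.

    Assumes the lobby frame is scaled uniformly to fit the client window and
    centered, with letterbox bars filling the rest. Returns None for clicks
    that land on the bars rather than on the video.
    """
    # One scale factor for both axes, otherwise the picture would be distorted.
    scale = min(client.width / lobby.width, client.height / lobby.height)
    # Where the scaled video starts inside the client window.
    offset_x = (client.width - lobby.width * scale) / 2
    offset_y = (client.height - lobby.height * scale) / 2
    # Undo the offset first, then the scale.
    lx = (x - offset_x) / scale
    ly = (y - offset_y) / scale
    if not (0 <= lx < lobby.width and 0 <= ly < lobby.height):
        return None
    return lx, ly


# Same aspect ratio: a 4K lobby on a 1080p window is a plain 2x scale.
print(client_to_lobby(960, 540, Size(1920, 1080), Size(3840, 2160)))  # (1920.0, 1080.0)

# Portrait phone-sized lobby on a 1080p landscape window: the video occupies
# only a narrow central column, so x = 100 falls on the left bar.
phone = Size(1179, 2556)
print(client_to_lobby(960, 540, Size(1920, 1080), phone))
print(client_to_lobby(100, 540, Size(1920, 1080), phone))  # None
```

The portrait example uses 1179×2556, the iPhone 15's native resolution, as a stand-in for a phone-shaped lobby. Viewed on a 1080p desktop, a naive per-axis rescale (x × 1179/1920) agrees with this mapping only at the exact horizontal center, so nearly every click would land off target.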

We’re running apps mode for development right now because it’s stable, even with its limitations. But lobbies mode is the future.

What This Looks Like In Practice

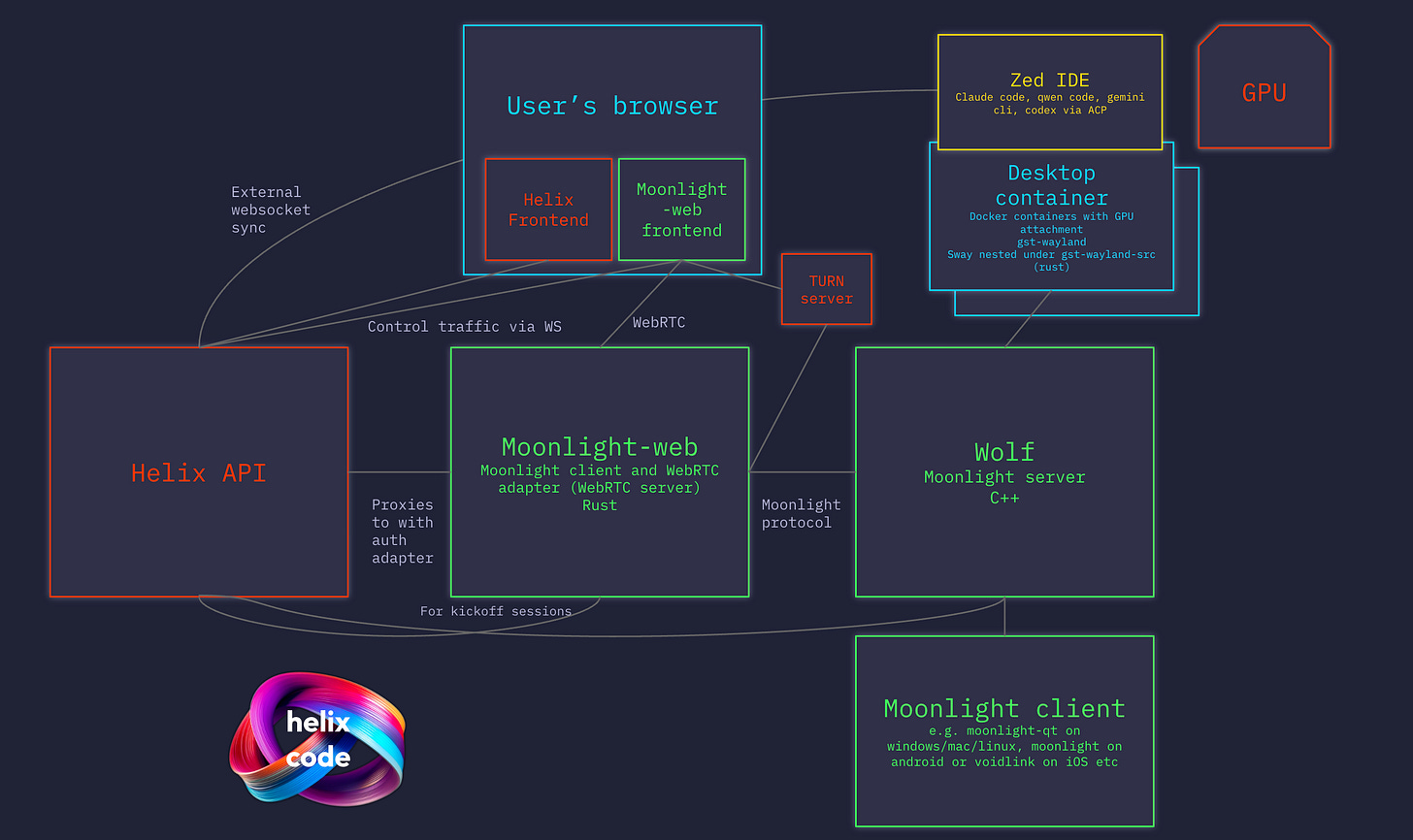

Here’s the architecture:

Helix API: Manages agent sessions, talks to Wolf to create/destroy containers

Moonlight-web: WebRTC adapter that bridges browser clients to the Moonlight protocol

Wolf: Moonlight server running in Kubernetes, managing GPU-attached containers

Desktop containers: Sway (Wayland compositor) running on gst-wayland-src, with full desktop environment

External clients: Native Moonlight clients on Mac/Windows/Linux/iOS/Android

Video travels from Wolf to Moonlight-web over the Moonlight protocol, and Moonlight-web forwards it to the browser over WebRTC. Control signals (connection and encryption setup) flow over WebSockets. Wolf handles GPU attachment and video encoding. The desktop runs real GUI applications in GPU-accelerated Wayland, rather than being forwarded over VNC or RDP.
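To make the two paths explicit, here is the same topology written down as data. This is purely descriptive: `Hop`, `VIDEO_HOPS`, `CONTROL_HOPS`, and `describe` are hypothetical names, and the hops are limited to what the architecture list states, plus the fact that native Moonlight clients speak the Moonlight protocol to Wolf directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    src: str
    dst: str
    transport: str


# Network hops taken by the video stream, per client type (server side first).
VIDEO_HOPS = {
    "browser": [
        Hop("Wolf", "Moonlight-web", "Moonlight protocol"),
        Hop("Moonlight-web", "browser", "WebRTC"),
    ],
    "native": [
        # Native Moonlight clients talk to Wolf directly; no adapter in between.
        Hop("Wolf", "native client", "Moonlight protocol"),
    ],
}

# Connection and encryption setup for browser clients travels over WebSockets.
CONTROL_HOPS = {
    "browser": [Hop("browser", "Moonlight-web", "WebSocket")],
}


def describe(client: str) -> str:
    """Render the video path for one client type as a single line."""
    hops = VIDEO_HOPS[client]
    parts = [hops[0].src]
    for hop in hops:
        parts.append(f"--[{hop.transport}]--> {hop.dst}")
    return " ".join(parts)


print(describe("browser"))  # Wolf --[Moonlight protocol]--> Moonlight-web --[WebRTC]--> browser
```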

You can watch an AI agent browse the web, write code in a real IDE, run commands in a real terminal, all streamed to your browser with gaming-grade latency.

Why This Matters

Streaming protocols matter a lot when you’re building visual AI agents. The latency, video quality, and network resilience all affect the user experience. Moonlight gives us:

Low latency: 50-100ms typically, works over 4G

Hardware encoding: GPU-accelerated H.264/H.265

Network resilience: Designed for unreliable wireless

Multi-platform: Clients already exist for browsers and every major OS, so we don’t have to build our own

Mature protocol: Battle-tested by millions of gamers

But we had to work within constraints designed for different semantics. Gaming protocols assume private, single-user sessions. AI agents need shared, multi-user sessions. The impedance mismatch creates real engineering challenges.

What We Learned

Protocol assumptions run deep: Even when a protocol is technically capable of what you need, the assumptions baked into the design can bite you. Moonlight’s one-app-per-client model is fundamental.

Workarounds compound complexity: Our kickoff session hack worked, but added a whole layer of complexity. Sometimes you need to wait for the right feature (lobbies) rather than building around limitations.

Multiplayer gaming has solved this: The gaming community has already solved shared-screen streaming. We just needed to find the right mode and wait for it to stabilize.

Open source saves the day: Wolf’s maintainer added lobbies mode based on real user needs (ours included). Being able to work directly with the developer and contribute back is why we love open source infrastructure.

What’s Next

We’re actively migrating to lobbies mode. Once we fix the input scaling and video corruption bugs, we’ll have proper multi-user agent support. At that point, you’ll be able to:

Connect with native Moonlight clients to watch agents work

Have multiple people viewing the same agent session

Remove all the kickoff session complexity from our codebase

Support mobile clients properly with pre-configured resolutions

If you’re building anything involving desktop streaming, especially for non-gaming use cases, check out Wolf. And if you’re curious about Helix Code or want to try streaming AI agent desktops, join our private beta via our Discord.

Oh, and here’s proof it can stream 4K video way nicer than RDP or VNC!