You should run local models: run Deepseek-R1 on Helix 1.5.0 now

Chinese Hedge Fund crashes US stock market by releasing Deepseek-R1, and what this means for your enterprise GenAI strategy

Deepseek-R1 crashed the US stock market on Monday and today is Wednesday, so my hot take is going to be a bit lukewarm by now. Sorry about that, but in my defence I was waiting for some Docker images to build…

Deepseek are clever fuckers 🍿

Well, I’ve got my popcorn out for this well-orchestrated economic war. Hats off to the Chinese hedge fund behind Deepseek for (presumably) opening a massive leveraged short on NVDA, then releasing an MIT-licensed reasoning model that’s competitive with OpenAI’s at (apparently) much lower training cost, timed to coincide with topping the Apple App Store and getting coverage on CNBC and Bloomberg, popping the bubble on NVDA’s valuation. That’s a damn clever way to fund your business!

State-of-the-art reasoning on my old 3090

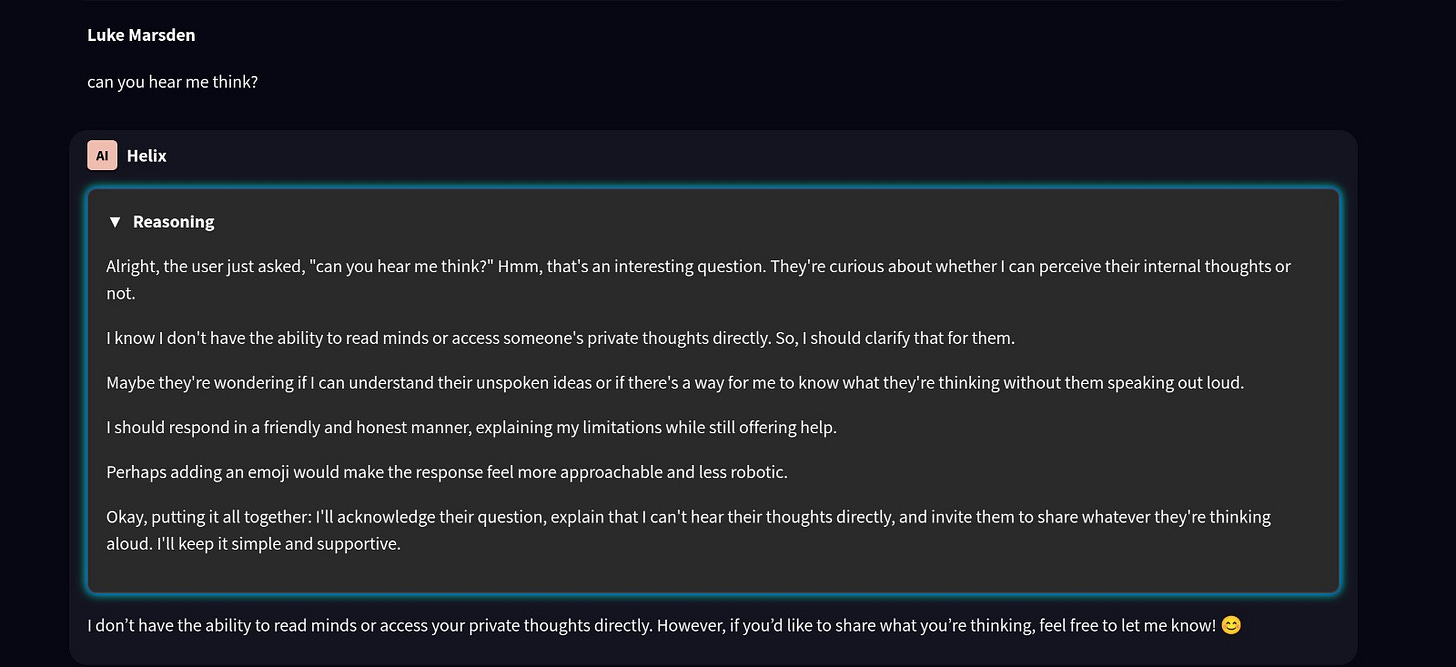

But it works. Starting with Helix 1.5.0, you can run Deepseek-R1 in Helix on an ancient 24GB NVIDIA 3090 from 2020. We selected the 8B and 32B distilled variants because, once quantized, they fit nicely on consumer hardware (quantization being a good tradeoff between lobotomization and memory requirements).
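For a rough sense of why quantization matters here, the sketch below does weights-only arithmetic: bytes = parameters × bits per weight ÷ 8. It assumes nominal parameter counts (8e9 and 32e9) and deliberately ignores the KV cache, activations and runtime overhead, all of which need extra memory on top. The function and constant names are just illustrative.

```python
# Back-of-the-envelope estimate of GPU memory taken up by model weights alone.
# Assumptions: nominal parameter counts (8e9 and 32e9); the KV cache,
# activations and runtime overhead are NOT included and add more on top.

GIB = 1024 ** 3           # bytes per GiB
RTX_3090_VRAM_GIB = 24    # the RTX 3090 has 24 GB of VRAM


def weight_memory_gib(params: float, bits_per_weight: float) -> float:
    """Memory occupied by the weights alone, in GiB."""
    return params * bits_per_weight / 8 / GIB


if __name__ == "__main__":
    for name, params in [("8B", 8e9), ("32B", 32e9)]:
        for bits in (16, 8, 4):
            gib = weight_memory_gib(params, bits)
            verdict = "fit" if gib < RTX_3090_VRAM_GIB else "exceed"
            print(f"{name} @ {bits:>2}-bit: {gib:5.1f} GiB of weights ({verdict} 24 GiB)")
```

With these inputs it reports about 7.5 GiB for the 8B model at 8-bit, but about 29.8 GiB for the 32B model at 8-bit, which already exceeds the 3090's 24 GiB before any KV cache. So on a single 24GB card the 32B variant presumably needs something closer to 4-bit (about 14.9 GiB of weights).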

And the 32B distill is not far off the massive 671B model in the benchmarks.

So now you can count the number of ‘r’s in ‘strawberry’ on your own GPUs. More importantly, you can do state-of-the-art reasoning over knowledge sources and API integrations in Helix, on your own secure infrastructure.

Why this matters for your enterprise GenAI strategy

On Monday I met with a large financial institution in the City of London that has gone all-in on proprietary models, having spent literally months getting OpenAI through security and compliance.

The guy I met was trying to get Deepseek-R1 running on his H100s that very morning.

Why?

Because, for the first time, there's an open model that's legitimately better than what they can get from the tech giants: Microsoft, Google, and Amazon. After spending months wrestling with Azure's security maze to get access to GPT-4 (apparently requiring some Microsoft back-channel voodoo, since OpenAI only deals directly with its own customers), they're now seriously considering running models locally.

And it's not just about dodging cloud costs or compliance headaches. The really interesting question they raised was about longevity: will open source models like R1 keep their edge over proprietary ones for weeks, months, or years? This isn't just academic; the answer is going to fundamentally shape their infrastructure investment strategy.

There's another angle too: reasoning models tend to give better answers the longer you let them think, and because hosted APIs bill per token, all that extra thinking makes cloud hosting eye-wateringly expensive. Running these models locally starts to look pretty attractive when you're doing heavy-duty quantitative research or engineering work that needs long, sustained reasoning runs. The potential to automate meaningful quant work at scale? That's the kind of thing that makes financial institutions sit up and take notice.
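To make the cost argument concrete, here is a toy calculation. Every number in it (the price per million output tokens, the monthly cost of a local GPU box, the request volume) is a hypothetical placeholder, not a real quote; the point is only to show how per-token billing scales with thinking length while a local machine's cost stays flat.

```python
# Toy comparison of per-token API billing vs a fixed-cost local GPU box.
# ALL prices and volumes below are hypothetical placeholders, not real quotes.


def api_cost(requests: int, output_tokens_per_request: int,
             usd_per_million_tokens: float) -> float:
    """Monthly API bill, counting output tokens only."""
    return requests * output_tokens_per_request * usd_per_million_tokens / 1_000_000


def break_even_tokens(local_usd_per_month: float,
                      usd_per_million_tokens: float) -> float:
    """Monthly output-token volume above which the fixed local cost is cheaper."""
    return local_usd_per_month / usd_per_million_tokens * 1_000_000


if __name__ == "__main__":
    PRICE = 10.0       # hypothetical USD per million output tokens
    LOCAL = 1_500.0    # hypothetical USD per month for an owned or leased GPU box
    REQUESTS = 10_000  # hypothetical requests per month

    # Letting the model think longer multiplies the tokens billed per request.
    for tokens in (1_000, 10_000, 50_000):
        bill = api_cost(REQUESTS, tokens, PRICE)
        print(f"{tokens:>6} tokens/request: API ${bill:,.0f}/month vs local ${LOCAL:,.0f}/month")
    print(f"break-even: {break_even_tokens(LOCAL, PRICE):,.0f} output tokens/month")
```

With these placeholder numbers, going from 1,000 to 50,000 tokens per request takes the API bill from $100 to $5,000 a month while the local box stays at $1,500. The sketch ignores that a single local GPU has finite throughput, so at high enough volumes you'd need more hardware too.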

Get it while it’s hot

https://github.com/helixml/helix/releases/tag/1.5.0

Deepseek-R1 is now baked into the -large image of Helix 1.5.0. Check out how to deploy it on your own infra here:

https://docs.helix.ml/helix/private-deployment/controlplane/

As ever, join our Discord or just email founders@helix.ml to chat to us!